Tactile Sign Language

You’ve likely heard of Helen Keller, a deaf-blind woman who was taught to communicate by her teacher Anne Sullivan. There is a famous story about how she first grasped language: her teacher traced the letters of the word “water” on her hand and then held it under a running faucet, helping her attach the meaning of “cold and wet” to a word.

Many people are surprised to hear that people like Helen Keller are able to communicate with others using language. After all, seeing and hearing are integral to how the majority of humans socialize. Even most accessibility features assume the use of either one’s ears or eyes: those who are blind are usually read aloud to, and those who are deaf are provided with extensive captions, but this doesn’t leave much room for those who are deaf-blind.

Helen Keller (left) communicated with her friends and companions by lightly holding their hands while they signed. This allowed her to feel the motion of the changing signs.

Sadly, Helen Keller’s communicative proficiency was an outlier at the time for people living with deafness and blindness. Historically, deaf-blind people have been under-resourced and wrongfully characterized as having a smaller capacity for using language. But deaf-blind people have developed their own way of communicating with each other, called tactile sign language. I’d like to bring attention both to the resilience of these individuals and to the ability of the human body to adapt, not only to physical change, but to social and individual circumstance.

What Makes A Language?

Sign languages have likely been around for thousands of years, even though most histories only began recognizing them as languages in the 17th century. Throughout much of history, they have been dismissed as inferior to spoken language in their abilities and the complexity of their function. Today, some might still assume that sign languages are less complex than spoken languages, but this is far from the case.

Let’s review our definition of language. There are dozens of definitions for language, but one of the most illuminating comes from Noam Chomsky, who defines language as “the inherent capability of native speakers to understand and form grammatical sentences.” If he were to write this today, he would likely replace “speakers” with a more neutral word, as not all language is spoken. Even so, this definition leaves a lot of wiggle room: what does it mean to understand and form grammatical sentences? What even is a sentence?

Questions like these could serve as the basis for a dissertation (and indeed they already have), but most linguists agree that a sentence is a domain made up of words that have special (grammatical) relationships between them. You might have been told that a sentence is a subject and a predicate, and for the most part this describes many of our sentences. More than this, though, a sentence expresses some type of observation about the world, born from a sequence of shared experience and individual understanding which finds form in words. So then, if a sentence is made of words, what is a word?

Words can be sorted into what are traditionally taught as “parts of speech” but which linguists call categories or classes. Each word belongs to a class which has special properties, and these are what determine its placement within the sentence. For example, a noun has to have a relationship with a verb, either as its subject or its object, to create a grammatical sentence. The proper noun “Bob” and the verb “runs” are only words, but once they enter into a grammatical relationship with each other (i.e., Bob becomes the agent of the action the verb depicts), they become a sentence with a new meaning.

This is Your Brain on Language

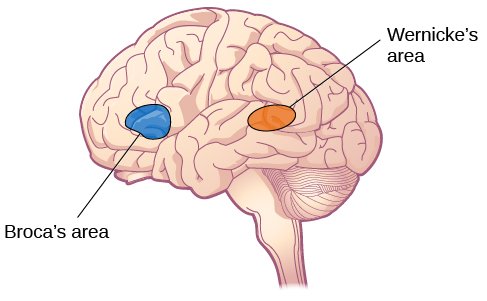

Remember how I said sign language has been misunderstood and unrecognized as equal to spoken language? Research from the past fifty years shows that sign language actually uses the same parts of the brain as spoken language. Broca’s area of the cerebral cortex is where language is produced in the brain, and Wernicke’s area is where it is processed. In other words, when someone makes signs or speaks, the same part of the brain is used, and the same is true when someone sees someone else signing or hears them speaking.

Broca’s area produces language, Wernicke’s area processes it for comprehension.

Sign language not only uses the same parts of the brain as spoken language, it also uses all of the same structure explained above. Sign language has sentences made up of words that have classes and grammatical relationships with each other. The only difference between the two is the way in which the language is communicated: the words are not articulated with the voice but gestured using the body, primarily the hands and face. This is why I like Chomsky’s definition of language: language is not a product, it is a capacity. Our brains are built to use language, and they adapt to find new ways of using it if the voice is not available to them.

The band Magma, creators of Kobaïa.

In this way, something can be a language if our brains are able to see parts that can be combined and used for communicative purposes. Languages can technically be made from almost anything. This is part of the reason why I love experimental constructed languages, like the musical language Kobaïan made by the band Magma, which links language to a musical pitch and sonority system in order to reflect the way certain words and ideas make us feel.

The Qualities of Spoken Language vs. Visual Sign Language

Sign language is not the same as reading, of course. It doesn’t use static visuals, but instead shapes and movement. Linguistics conceptualizes language by breaking it down into parameters. For spoken language the parameters are as follows:

Manner - the way the sound moves through the vocal tract; a plosive sound like /p/ moves in the mouth in a different way from a strident sound like /s/

Place - the location of where the sound is being produced; a velar sound like /k/ is produced in the back of the mouth, whereas a labiodental sound like /f/ is at the front.

Voicing - whether or not the vocal cords are vibrating while the sound is produced; some sounds like /t/ and /d/ are the same in every way except whether voice is used.

Airstream - this is kind of a bonus since 73% of languages (including English) only use pulmonic consonants, meaning that we push air out of the lungs when we speak. Some languages make use of “implosive” or “ejective” sounds which build up pressure in the vocal tract. For sounds like this, air doesn’t just flow from the lungs; it actually gets pulled into the mouth or pushed out based on the downward or upward movement of the larynx. There are also “clicks” which use the tongue to build pressure.

When we combine these parameters, we build an inventory of sounds (more than 150) that is more than capable of supplying the parts to build a language. Interestingly, no language on Earth uses all of the possible sounds the mouth can make, because it’s too much work for no extra reward. We talk and process language quickly and intelligently, so there is no need to use entirely new sounds just to avoid homophones like “break” and “brake” when they don’t realistically cause many comprehension problems.
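To make the idea of parameters a little more concrete, here is a small sketch in Python that treats each consonant as a bundle of the four parameters above and asks where two sounds differ. The class and function names are my own inventions for illustration, and the inventory is deliberately tiny; this is a toy model, not how a phonetician would formally encode features.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Consonant:
    """A consonant described by the four parameters discussed above."""
    symbol: str
    manner: str
    place: str
    voiced: bool
    airstream: str = "pulmonic"  # the default for English consonants


def differing_parameters(a: Consonant, b: Consonant) -> list[str]:
    """Return the names of the parameters on which two consonants differ."""
    return [
        f.name
        for f in fields(a)
        if f.name != "symbol" and getattr(a, f.name) != getattr(b, f.name)
    ]


t = Consonant("t", manner="plosive", place="alveolar", voiced=False)
d = Consonant("d", manner="plosive", place="alveolar", voiced=True)
k = Consonant("k", manner="plosive", place="velar", voiced=False)

print(differing_parameters(t, d))  # ['voiced']
print(differing_parameters(t, k))  # ['place']
```

The first comparison shows what it means for /t/ and /d/ to be “the same in every way except whether voice is used”: only one parameter changes.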

Sidenote: there actually is a language that attempts to use all possible sounds, called Ithkuil. It is a constructed language and I am planning on making a post about it in the future!

Now that we know the parameters used by spoken language, what are those of sign language? Most sign language educators recognize five parameters for visual sign language.

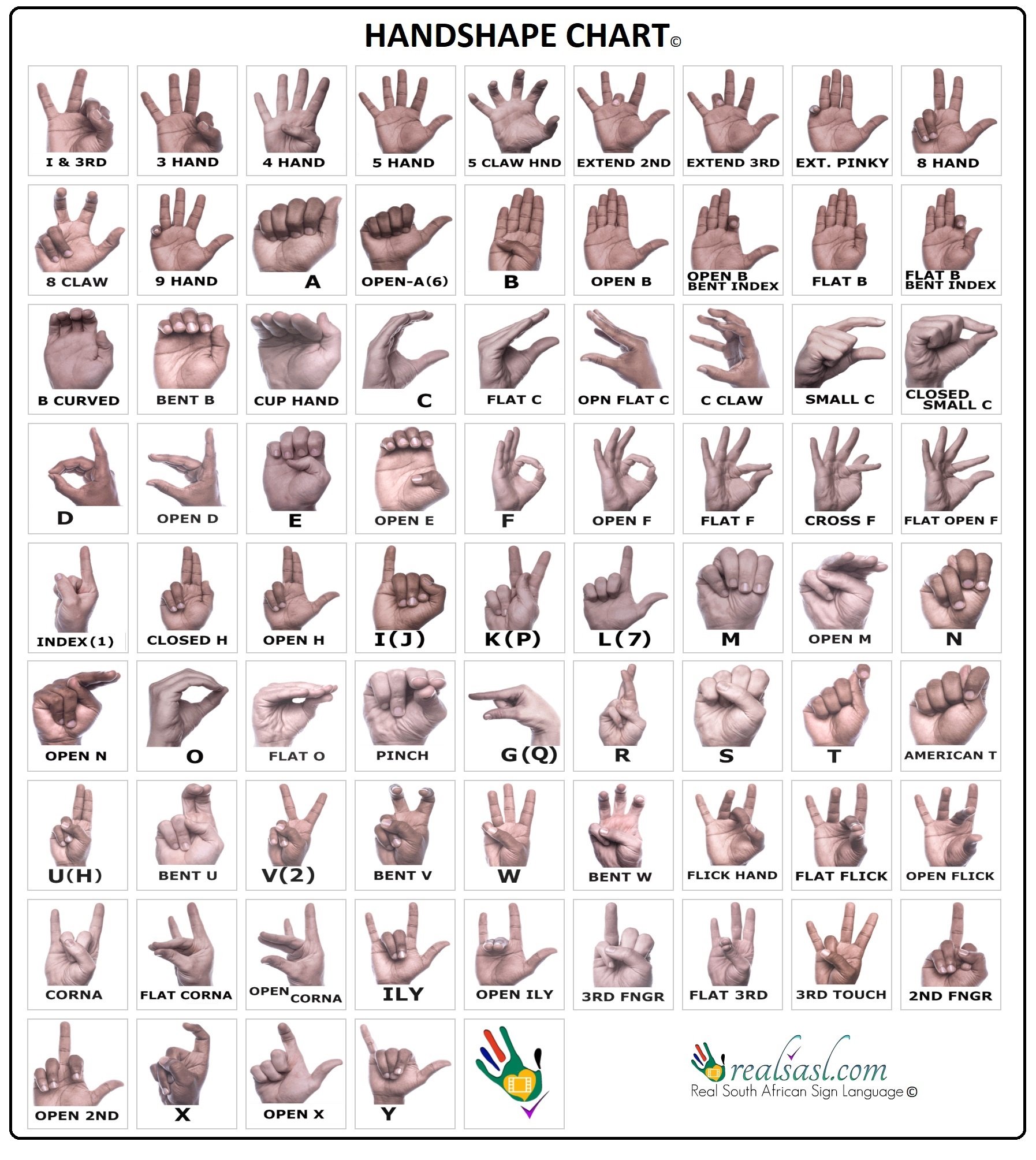

Handshape - likely the most varied set with over 50 unique possibilities, this refers to the positioning of the fingers, including the bend of the knuckles, fingertip touching, finger crossing, etc.

Location - where the hand is located; sign language uses locations on the upper body, face, in front of the body, against the chest, and many more.

Movement - how the hand moves; this movement might be forward or backward, to the left or right, up or down, a wave motion, or it might be a movement towards certain body parts.

Palm orientation - which directions the palms face; this could be palm-up, palm-down, towards the body, away from it, and more.

Non-manual/Expression - this refers to a change of expression, usually in the face like raised eyebrows or a crinkled nose, or a body movement.
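The five parameters above can be sketched as a simple record, which also makes it easy to spot “minimal pairs,” signs that differ in only one parameter. The snippet below is a rough illustration under my own assumptions: the parameter values are simplified labels I chose, the MOTHER/FATHER entries are a rough approximation of the ASL signs (which contrast in location), and SIGN_X is entirely made up.

```python
from itertools import combinations
from typing import NamedTuple


class Sign(NamedTuple):
    """One sign described by the five parameters listed above."""
    handshape: str
    location: str
    movement: str
    palm_orientation: str
    non_manual: str


# Simplified, illustrative values; SIGN_X is a hypothetical sign.
LEXICON = {
    "MOTHER": Sign("open-5", "chin", "tap", "sideways", "neutral"),
    "FATHER": Sign("open-5", "forehead", "tap", "sideways", "neutral"),
    "SIGN_X": Sign("fist", "chest", "circle", "sideways", "neutral"),
}


def minimal_pairs(lexicon):
    """Yield (gloss_a, gloss_b, parameter) for signs differing in exactly one parameter."""
    for (gloss_a, a), (gloss_b, b) in combinations(lexicon.items(), 2):
        diffs = [name for name, x, y in zip(Sign._fields, a, b) if x != y]
        if len(diffs) == 1:
            yield gloss_a, gloss_b, diffs[0]


for pair in minimal_pairs(LEXICON):
    print(pair)  # ('MOTHER', 'FATHER', 'location')
```

This mirrors the /t/ and /d/ example from spoken language: changing a single parameter is enough to change the word.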

Each sign can be described using these parameters, and they are important to learn as one tries to acquire proficiency in sign language. But what about those who need sign language not only because they’re deaf, but because they also can’t see? Finally, we get to the main topic at hand (pun intended).

Tactile Sign Language

In a lot of respects, tactile sign language wouldn’t exist in the same way without visual forms of sign language, as the two overlap a lot in terms of the parameters we have discussed above. Even though I will be discussing tactile signing as a whole, most of my research has been informed by sources focusing on Protactile, often called PT, as it is likely the most widely used form of tactile sign language (at least in the US).

Protactile sign language in action: the individual on the left is the “speaker” and the individual on the right is the “listener.” You can see the use of contactspace in the way the woman’s palm and fingers are utilized by the man.

Visual sign language makes use of what is called airspace, the area around the body which is used as the medium for signs to be performed and interpreted. Tactile sign languages cannot do this in the same way because most people who use them are not able to see, or they have difficulty with visual aspects of signing. Instead of airspace, tactile sign languages make use of contactspace. This essentially makes the body and the skin a medium for communication. Language isn’t heard or seen, it is felt.

For someone who uses spoken language, this might seem very limited or confusing, but it is important to remember that our brains speak primarily from the subconscious, as studies into stuttering and speech impediments have shown. Before learning or using language, our brains are already working to get us prepared to use it because they are built to recognize and process it. Acquiring a language, such as our native language, is sometimes not our choice! When deaf-blind people start to learn tactile signing, their bodies and brains react to it, developing subconscious knowledge, just as we do when we learn languages. This doesn’t mean that they don’t have to learn the languages, but it does mean that their bodies are likely more receptive to communicating through touch, and so this is a much more naturally occurring process for someone who can’t see or hear. Additionally, deaf-blind people often experience intense isolation, so a manner of communicating like this provides them with a new and more complex way to connect with others.

The nature of contactspace requires (at least at this point in time) two tactile signers to be physically together so that they may touch one another. Because of this, events like the Covid-19 pandemic confront deaf-blind people with different challenges than those faced by people who use spoken language or visual signing; while Zoom can be used by the deaf, blind, and non-disabled fairly easily, deaf-blind people have not been able to participate in the same way. Additionally, this limits the use of airspace, particularly when two hands are used during signing, because tactile signers do not have a way to ground themselves in airspace without a point of contact.

A huge difference between visual sign language and tactile sign language is that there are four arms involved in communication instead of just two. In visual signing, the “listener’s” body is mostly passive while processing language. Aside from a head nod or shake, they simply need to look at the signs to get the message. With tactile signing, the “listener’s” arms must be utilized because of the concept of contactspace; if the “listener” is not touched somewhere (e.g., the arms, face, or lap), there is no sensory information received to interpret the message.

A diagram of Protactile speakers; A1 is the “speaker’s” dominant hand; A2 is the “listener’s” dominant hand; A3 is the “speaker’s” non-dominant hand; A4 is the “listener’s” non-dominant hand.

As should be expected of any language, there are rules that govern its use, particularly around the notions of dominant and non-dominant hands. The “speaker” must always keep their dominant hand attached to the non-dominant hand of the “listener.” This is done to ensure that there is a constant contactspace, but also because the “speaker” must use their dominant hand to communicate the most integral parts of a tactile sign. The “listener” uses their dominant hand like a nodding or shaking head: swiping the fingers back and forth indicates “no” and tapping them indicates “yes,” although these are probably better translated as “I don’t understand” and “I am following along.”
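The two rules just described (constant contact between the “speaker’s” dominant hand and the “listener’s” non-dominant hand, plus the tap and swipe feedback signals) can be sketched as a toy model. Everything here is my own simplification for illustration: the names are hypothetical, the arm labels A1 through A4 are borrowed from the diagram caption, and real Protactile conversation is far richer than a lookup table.

```python
from enum import Enum


class Backchannel(Enum):
    """Feedback the listener gives with their dominant hand (A2)."""
    TAP = "tap"      # fingers tapped
    SWIPE = "swipe"  # fingers swiped back and forth


MEANING = {
    Backchannel.TAP: "I am following along",
    Backchannel.SWIPE: "I don't understand",
}

# Rule from the text: the speaker's dominant hand (A1) stays on
# the listener's non-dominant hand (A4).
REQUIRED_CONTACT = frozenset({"A1", "A4"})


def contactspace_held(contacts) -> bool:
    """True if the required A1-A4 contact is among the current hand contacts."""
    return REQUIRED_CONTACT in {frozenset(pair) for pair in contacts}


def interpret(signal: Backchannel) -> str:
    """Translate a backchannel signal into its rough meaning."""
    return MEANING[signal]


# The A3-A2 contact below is a hypothetical extra point of contact.
print(contactspace_held([("A4", "A1"), ("A3", "A2")]))  # True
print(contactspace_held([("A3", "A2")]))                # False
print(interpret(Backchannel.TAP))                       # I am following along
```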

Because tactile sign languages are relatively new, they are still being actively constructed within deaf-blind communities. Historically, there have been a few different methods of tactile signing, such as the Lorm alphabet, although these have only been able to communicate rather short messages and they require signing words with letters according to their English spelling. Protactile is a leading form of tactile signing because it is a transformative way of communicating. Protactile users are in the process of adding words to their vocabularies that make use of contactspace across several parts of the body including the face, neck, head, chest, shoulders, stomach, lap, and more. The specifics of how tactile signs work are a bit hard to understand without a linguistic background because their parameters are not as clear-cut (plus research in this field is limited), so I won’t go much further into them, but if you would like to learn more, I suggest this video.

The idea of touching someone in order to communicate with them is endlessly expressive, but it might also sound a bit scary. Everyone has a different relationship with their body; some have extreme difficulty with being touched anywhere, much less in these sensitive places. Deaf-blind people are extremely capable, though in many ways they are very vulnerable, and this is another reason why tactile signing is so important: it allows deaf-blind people to exercise autonomy and be understood by non-deaf-blind individuals.